Development

Mobile

I’ve been working in mobile since 2004, starting with games for Java and BREW handsets and moving to the iPhone in 2008.

I’ve shipped over a dozen mobile titles. Three of them (Doodle Jump, EA’s Pogo games, and Surviving High School) each hit #1 on the App Store.

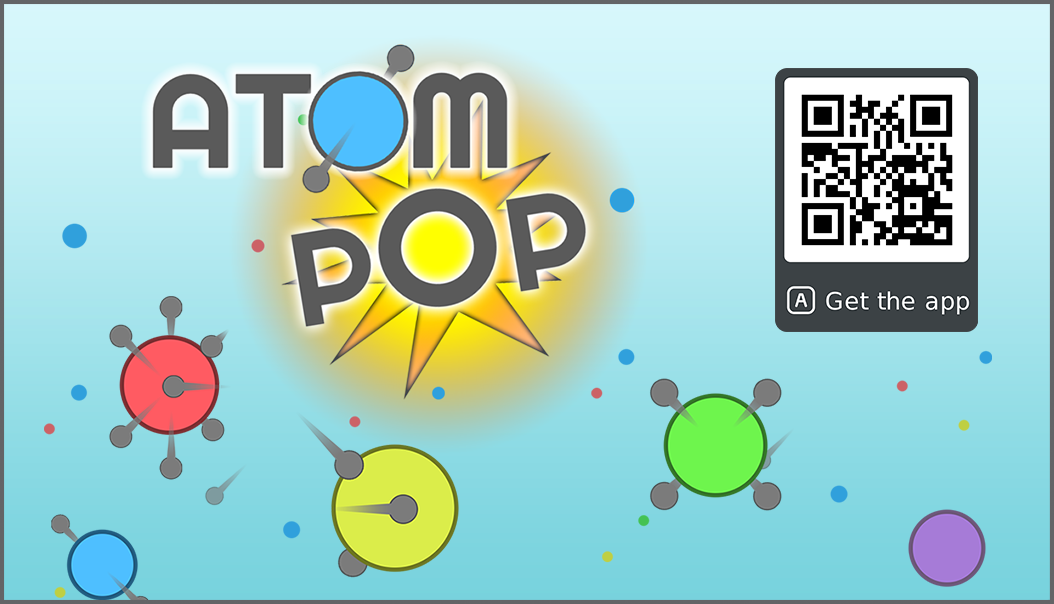

I designed and developed Atom Pop for iOS and Android. I also did mobile prototyping for Smule’s music app Sing, using Unity and C#.

Realtime VFX

I have many years of real-time visual effects experience in environments ranging from Unity to JavaScript (particle.js) to custom FX engines.

These effects are from Smule’s Sing, a karaoke app for iOS and Android. Singers can choose to augment their performance video or opt for stylized lyrics.

The effects are particle-, shader-, or image-based, and are derived algorithmically whenever possible.

I was in charge of turning the After Effects concepts into real-time effects while minimizing memory footprint and maximizing performance.

IMVU Labs

Freefall, Heirloom, SuperDash, and The Hunt are some of the Unity games we made to showcase the API we developed at IMVU, which allows IMVU’s avatars to be used within Unity experiences. I headed this group of developers, designers, and artists, contributing art as well as C# code.

These projects used many parts of the Unity engine, including Animation, Mecanim, Photon Networking, Finite State Machines, Ragdoll Physics, Lighting, Particles/VFX, Terrain, Audio, and UI.

3D Avatar World

I developed this prototype avatar world in Unity, coding it in C#.

I initiated a partnership with DAZ to leverage their characters and morph system. I split their assets into categorized bundles, set up a database to store and retrieve the data, and added terrain, other 3D assets, camera tracking, and HDR lighting.

I also developed movement, joystick controls, and an item placement system, and coded weather and environment effects: rain, wind, lightning, fog, and time of day.

Shaders and Effects

This globe is an example of a shader I developed in Unity’s Shader Graph.

The input texture is a black-and-white image that defines the land and the water. Each region’s color gradient is a Fresnel effect blending between two input colors, one for edges and one for front-facing surfaces.
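As a rough sketch of the math, assume the shader uses Shader Graph’s Fresnel Effect node. That node raises one minus the clamped dot product of the surface normal $\hat{n}$ and view direction $\hat{v}$ to a power $p$. Assume also that the shader blends the two regions by the mask value $m$, taken here as 1 for land and 0 for water. Under those assumptions, the per-pixel color works out as follows. The names $p$, $m$, and the color symbols are illustrative and are not the shader’s actual property names.

```latex
\begin{aligned}
F &= \bigl(1 - \operatorname{saturate}(\hat{n}\cdot\hat{v})\bigr)^{p} \\
C_{\text{land}} &= (1 - F)\,C^{\text{front}}_{\text{land}} + F\,C^{\text{edge}}_{\text{land}} \\
C_{\text{water}} &= (1 - F)\,C^{\text{front}}_{\text{water}} + F\,C^{\text{edge}}_{\text{water}} \\
C &= m\,C_{\text{land}} + (1 - m)\,C_{\text{water}}
\end{aligned}
```

Where the surface faces the camera, $\hat{n}\cdot\hat{v} \approx 1$, so $F \approx 0$ and the front color dominates. Toward the silhouette, the dot product falls toward 0, so $F \to 1$ and the edge color takes over.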

The pulse between the poles is created with vertex displacement. Its timing comes from either the song’s MIDI data or its sound amplitude.
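Here is one way to express the amplitude-driven version. It is a sketch built on two assumptions. First, the audio amplitude is the RMS of the most recent $N$ samples. Second, the pulse is a Gaussian band centered at height $y_c(t)$ that sweeps from pole to pole and displaces each vertex along its normal. The Gaussian profile, the band width $\sigma$, and the maximum height $h$ are hypothetical choices for illustration, not the shader’s actual formulation.

```latex
\begin{aligned}
a(t) &= \sqrt{\frac{1}{N}\sum_{i=1}^{N} x_i^{2}} \\
w(y, t) &= \exp\!\left(-\frac{\bigl(y - y_c(t)\bigr)^{2}}{2\sigma^{2}}\right) \\
\mathbf{p}' &= \mathbf{p} + h\,a(t)\,w(y, t)\,\hat{n}
\end{aligned}
```

Here $x_i$ are audio samples normalized to $[-1, 1]$. $y \in [-1, 1]$ is the vertex’s normalized height on the sphere, with the poles at $\pm 1$. When the pulse is timed from MIDI data instead, $a(t)$ would be replaced by an envelope triggered on note events.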

The behavior of the dancing particle is also defined by song parameters.

UI Prototyping

I developed this fully functional prototype in C# so our designers could rapidly iterate on their UI/UX concepts.

With Unity, we could test, make changes, and retest within hours or days.

Here you can see an interactive 3D globe that zooms into a Google Map, a scrubbable progress bar with a definable shape, dual-axis item selection, a working video mini-player, and JSON data retrieval, along with particle effects and custom shaders.

Virtual Reality

I worked with Morph3D (now Tafi) to integrate their avatar API with VRChat’s SDK, coding in C# in Unity.

This video shows avatar loading, item retrieval from the server, and the camera focusing on the area of interest for the selected category, all inside VRChat.

I developed this in Unity for the HTC Vive. The buttons are generated dynamically as categories are selected, all using Unity’s native UI (buttons, scroll views, render textures, etc.).

I also worked on several VR projects for the Oculus Rift.

Augmented Reality

For Smule’s AR initiative:

I developed 3D assets (modeling, texturing, lighting), worked with designers and developers to define the pipeline using ARKit, and rapidly prototyped in Unity (C#) to define the look and explore possibilities.

For IMVU:

I built a system in Unity (C#) that retrieves 3D items (asset bundles) from a server and displays them in an AR room seen through your phone. Selecting an object lets you place it: move, scale, rotate, or remove it.

Visuals Coming Soon